openFuyao Officially Released, with a Pluggable Architecture Driving the New Cloud Native Ecosystem

"Core+Extension" Lightweight Design, from Basic Orchestration to Heterogeneous Computing Power Scheduling

The openFuyao community is dedicated to building an open software ecosystem for diversified computing clusters. We focus on promoting efficient collaboration between cloud native and AI native technologies and on maximizing the utilization of effective computing power. The community edition follows a modular, lightweight, secure, and reliable design philosophy. It is built on and optimized from the open-source Kubernetes platform and provides out-of-the-box containerized cluster management capabilities. It covers basic functions such as resource orchestration, auto scaling, and multi-dimensional monitoring, meeting the O&M requirements of enterprise-level production environments.

This edition adopts the "core platform+pluggable components" architecture and provides various industry-level high-value components through the built-in application market. Its key capabilities include hybrid scheduling of intelligent and general-purpose computing power, unified management of heterogeneous resources, dynamic intelligent scheduling, and enhanced end-to-end observability. For AI and big data scenarios, it integrates a computing power affinity scheduling component that significantly boosts the efficiency of data-intensive tasks.

With its "lightweight core+ecosystem enablement" mode, the openFuyao community edition empowers enterprises to quickly build efficient, elastic, and intelligent computing infrastructure and simplify O&M in heterogeneous environments, providing agile support for digital transformation.

As the first version released by the openFuyao community, openFuyao community edition 25.03 focuses on partners' pain points and implements joint service innovation through the community. The following sections describe the key features of openFuyao 25.03 in detail.

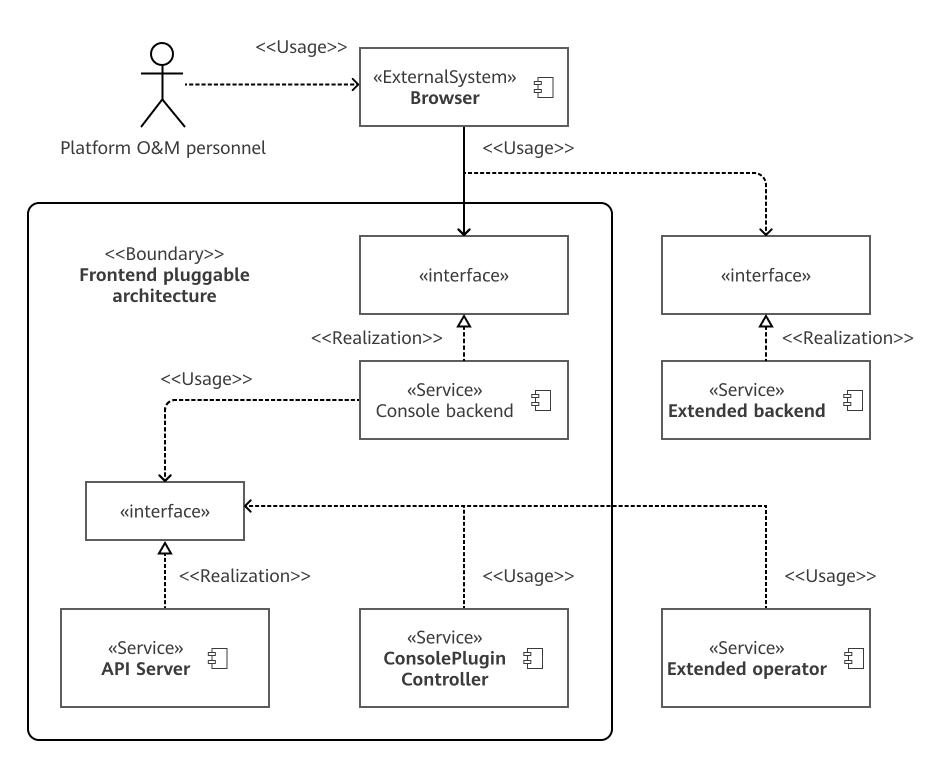

Pluggable Architecture: Custom Container Platform

The openFuyao community edition provides built-in core platform functions, such as resource orchestration, cluster management, application management, cluster observability, and user permission management. On this basis, openFuyao breaks through the rigid design of the traditional container platform and adopts a modular architecture.

- Plug-in-based capabilities: Except for the basic platform capabilities, all other functions are implemented through extensions.

- Hot swap experience: Components can be dynamically loaded or unloaded without restarting the cluster.

- Open ecosystem: Online and local applications can be freely imported to the application market.
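The load and unload behavior listed above can be pictured with a minimal sketch. It assumes a purely in-memory registry; the class `ExtensionRegistry`, its methods, and the extension names are hypothetical illustrations and are not openFuyao's actual API.

```python
from typing import Callable, Dict, List


class ExtensionRegistry:
    """Hypothetical in-memory registry; not openFuyao's actual API."""

    def __init__(self) -> None:
        self._extensions: Dict[str, Callable[[], str]] = {}

    def load(self, name: str, handler: Callable[[], str]) -> None:
        # Register an extension while the platform keeps running.
        if name in self._extensions:
            raise ValueError(f"extension {name!r} is already loaded")
        self._extensions[name] = handler

    def unload(self, name: str) -> None:
        # Remove an extension; unknown names are ignored.
        self._extensions.pop(name, None)

    def loaded(self) -> List[str]:
        # Names of the currently loaded extensions, sorted for stable output.
        return sorted(self._extensions)


registry = ExtensionRegistry()
registry.load("numa-aware-scheduling", lambda: "ok")
registry.load("colocation", lambda: "ok")
registry.unload("colocation")
print(registry.loaded())
```

The point of the sketch is only that extensions are added and removed at run time while the registry itself, standing in for the core platform, is never restarted.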

NUMA-Aware Scheduling: Each CPU Working in the Optimal Position

Non-Uniform Memory Access (NUMA)-aware scheduling addresses the cross-NUMA-node memory access bottleneck by making the NUMA topology visible at both the cluster level and the node level. Applications are scheduled according to NUMA affinity, which improves average throughput by 30%. The NUMA architecture reduces memory access latency and improves system performance, but it also makes system resources harder to schedule and manage. The NUMA-aware scheduling component of openFuyao enables unified scheduler management and provides two supporting capabilities. NUMA resource monitoring visualization displays the allocation and usage of NUMA resources in the system in real time on a graphical user interface (GUI). Multi-scheduler management provides a unified NUMA resource management API and supports mainstream container orchestration schedulers such as Volcano.
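As an illustration of what NUMA affinity means for placement, the sketch below picks a single NUMA node that can hold an entire request, so that the workload does not need memory from another node. The `NumaNode` type, the `pick_numa_node` function, and the best-fit tie-breaking rule are assumptions made for this example; the source does not describe openFuyao's actual placement policy.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class NumaNode:
    node_id: int
    free_cpus: int
    free_mem_gib: int


def pick_numa_node(nodes: List[NumaNode], cpus: int, mem_gib: int) -> Optional[int]:
    """Return the id of one NUMA node that fits the whole request, or None.

    Assumed policy: best fit by leftover CPUs, ties broken by lower node id.
    """
    candidates = [
        n for n in nodes if n.free_cpus >= cpus and n.free_mem_gib >= mem_gib
    ]
    if not candidates:
        return None  # The request would have to span NUMA nodes.
    best = min(candidates, key=lambda n: (n.free_cpus - cpus, n.node_id))
    return best.node_id


nodes = [NumaNode(0, 4, 16), NumaNode(1, 16, 64)]
print(pick_numa_node(nodes, cpus=8, mem_gib=32))   # only node 1 is large enough
print(pick_numa_node(nodes, cpus=2, mem_gib=8))    # both fit; node 0 is the tighter fit
print(pick_numa_node(nodes, cpus=32, mem_gib=8))   # no single node fits
```

Returning `None` instead of splitting the request is a deliberate simplification: a real scheduler would then decide whether to queue the workload or accept cross-node memory access.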

openFuyao Ray: One-Stop Ray Cluster Management, Improving Computing Power Utilization

Provides Ray solutions with high usability, high performance, and high computing power utilization in cloud-native scenarios. Supports full lifecycle management of Ray clusters and jobs, reduces O&M costs, enhances cluster observability, fault locating, and optimization, and implements efficient computing power scheduling and management.

NPU Operator: Automatic Deployment and Cloud Native Management

Implements minute-level automatic management of Ascend NPU hardware, including NPU hardware feature discovery as well as automatic management and installation of components such as drivers, firmware, hardware device plug-ins, metric collection, and cluster scheduling. NPUs can be deployed and available within 10 minutes.

Colocation: Cluster Resource Utilization Improved by 30% to 50%

Supports hybrid deployment of online and offline services. During peak periods of online services, resources are scheduled to online services first so that they are guaranteed ahead of offline services. During off-peak periods of online services, offline services are allowed to use oversubscribed resources. This improves cluster resource utilization by 30% to 50%, with minimal QoS impact and a jitter ratio of less than 5%. The complete colocation feature set will be available in version 25.06.
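The peak and off-peak behavior can be sketched as a simple calculation of how much CPU offline services may borrow on one node. The function name `offline_allocatable_millicores`, the 10% reserve, and the sample figures are assumptions chosen for illustration only; they are not taken from openFuyao.

```python
def offline_allocatable_millicores(
    capacity: int, online_usage: int, reserve_percent: int = 10
) -> int:
    """CPU (in millicores) that offline services may borrow on one node.

    Assumed rule: online usage is always served first, a fixed share of
    capacity is held back as headroom, and the remainder is lent out.
    """
    reserve = capacity * reserve_percent // 100
    return max(0, capacity - online_usage - reserve)


capacity = 64_000  # a hypothetical 64-core node

# Peak period: online services use most of the node, little is lent out.
print(offline_allocatable_millicores(capacity, online_usage=56_000))

# Off-peak period: idle capacity is lent to offline services.
print(offline_allocatable_millicores(capacity, online_usage=16_000))

# Online demand eats into the reserve: offline services get nothing.
print(offline_allocatable_millicores(capacity, online_usage=62_000))
```

Integer millicores are used instead of fractional cores to keep the arithmetic exact. The reserve is what keeps online services from being squeezed when their load rises faster than offline work can be evicted.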

Multi-core High-Density Cluster Scheduling: Container Deployment Solution in High-Density Scenarios with 256 or More Cores

Multi-core high-density scenarios face three challenges: surging lock contention, unbalanced resource matching, and deployment density bottlenecks. To address these challenges, the community edition supports service type detection at the cluster layer, preventing I/O-intensive, memory-sensitive, and compute-sensitive services from being deployed on the same node. The scheduling component at the multi-core high-density cluster layer detects the multi-core topology and optimizes the pod resource allocation policy, improving the container deployment density by 10%. The complete feature set will also be available in version 25.06.

Reference link: https://docs.openfuyao.cn/docs/v25.03/%E5%8F%91%E8%A1%8C%E8%AF%B4%E6%98%8E/%E7%89%88%E6%9C%AC%E4%BB%8B%E7%BB%8D

This article was first published by the openFuyao Community. Reproduction is permitted in accordance with the terms of the CC-BY-SA 4.0 License.